Data management and workflow

We love helping experts in specialised fields get the best results from very large volumes of marine data. We create systems that cut administrative overhead, reduce the need for training in “the computer system”, and help to find and correct data problems or prevent them from occurring in the first place. With the time-consuming details handled automatically, experts are free to focus on higher-level business or research problems. We can also increase the value of data by giving users confidence in its provenance.

How we can help

We have developed custom systems to help with:

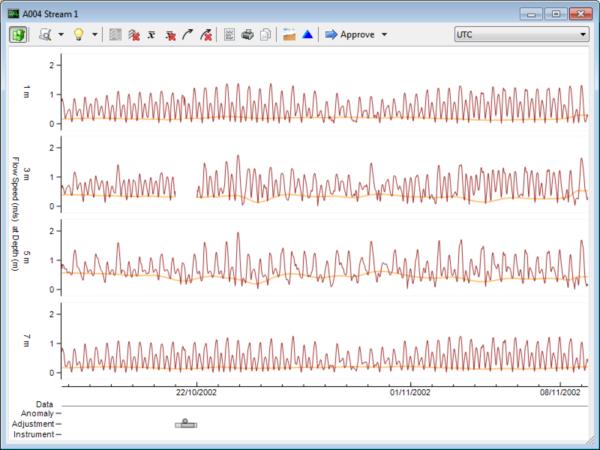

- Bringing together a wide variety of information, such as sensor outputs, PDF documents, images and chart data

- Tracing outputs back to the source of the raw data, including all the corrections, updates, reviews, checks, and calculations that contributed to the final result

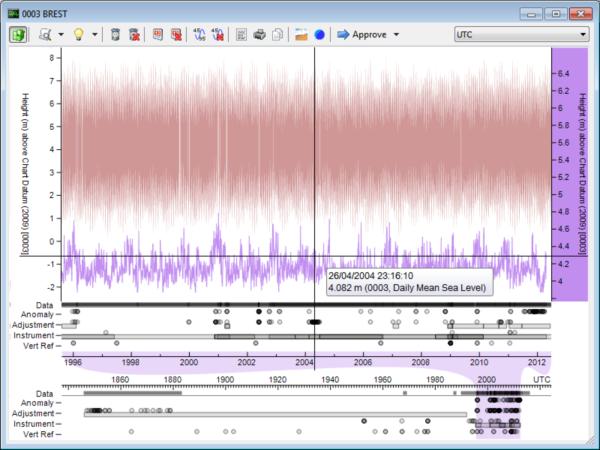

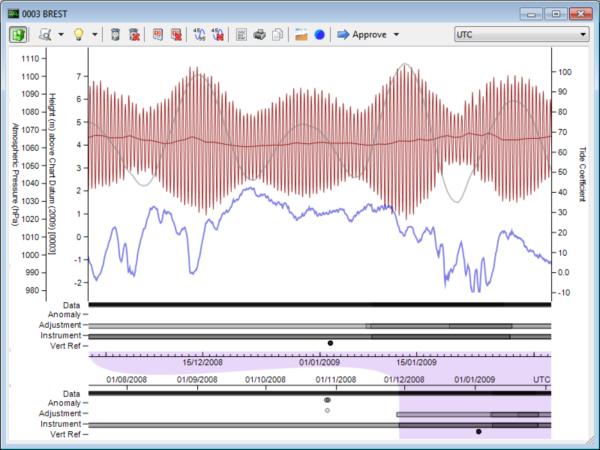

- Visualising data to help expert staff with analysis

- Managing the flow of tasks between different members of staff in an organisation

- Producing print-ready books and reports, and distributing data online automatically

- Automatically identifying potential data problems

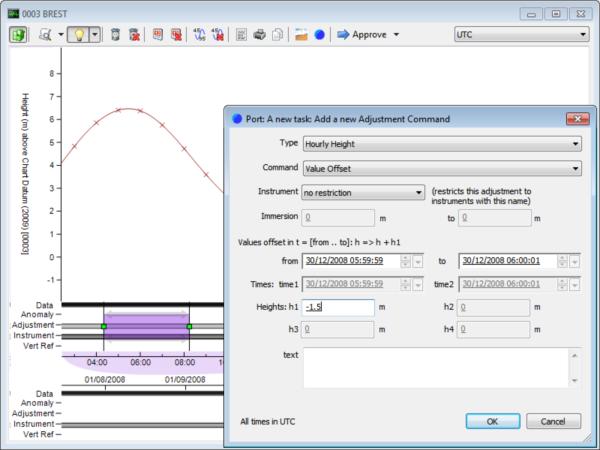

- Simplifying the correction of data problems, such as sensor clock drift
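
Clock drift is commonly corrected by assuming the logger's clock drifts at a constant rate between two points where it is checked against a reference. The following is a minimal Python sketch of that linear correction, not the method our systems necessarily use. It assumes the clock was exact when synchronised at `sync_time` and was found to be `offset_at_check` fast at `check_time`, as read on the logger's own clock. The function name and parameters are hypothetical.

```python
from datetime import datetime, timedelta


def correct_clock_drift(timestamps, sync_time, check_time, offset_at_check):
    """Remove linear clock drift from logger timestamps (hypothetical helper).

    Assumes the logger clock was exact at sync_time and read offset_at_check
    ahead of true time at check_time (both in logger time), with the error
    growing linearly in between. Use a negative offset for a slow clock.
    """
    span = (check_time - sync_time).total_seconds()
    if span <= 0:
        raise ValueError("check_time must be after sync_time")
    corrected = []
    for t in timestamps:
        # Fraction of the sync-to-check interval elapsed at this reading.
        fraction = (t - sync_time).total_seconds() / span
        # Subtract the share of the total error accumulated by this reading.
        corrected.append(t - offset_at_check * fraction)
    return corrected
```

For example, a logger synchronised on 1 January and found 100 seconds fast on 11 January would have a reading stamped 6 January 00:00 moved back by 50 seconds. A logger checked several times during a deployment would call for a piecewise version that interpolates between successive checks.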

Publishing

Publishing data can be difficult and time-consuming, and getting it right first time is hard. When some members of a team are adding new data while someone else is making last-minute corrections to a publication, it is easy for errors to find their way into the published result.

We can solve these issues by giving you full control over exactly which subset of your data will end up in a publication and who can change that subset at any given time.

Data provenance

Establishing where collected data came from can be difficult, and it raises many questions. How accurate was the instrument that gathered the data? Does the data contain problems caused by sensor errors? Have those problems been corrected, and can we verify that they were corrected properly? How can we reliably prevent “dirty” data from being used in calculations by accident?

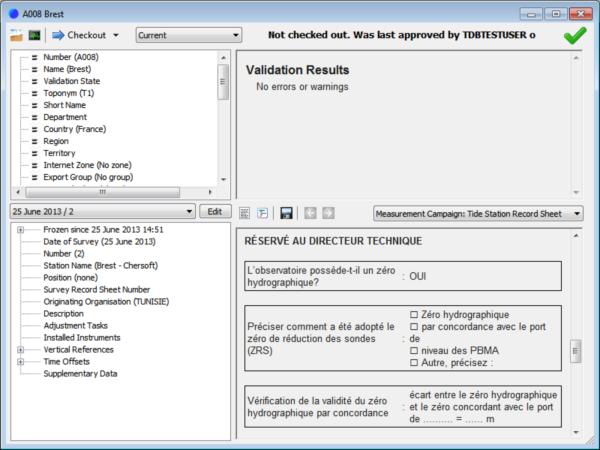

Our system answers these questions by letting users navigate from the outputs of calculations all the way back to the details of the sensor that gathered the data and the exact raw data it recorded. Any corrections and adjustments applied to the raw data are clearly visible in the results. For extra assurance, a workflow step can be introduced that requires a second person to check any changes.
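
One way to model this kind of traceability is to keep the raw readings untouched and record every correction as a separate, attributed adjustment that only takes effect once someone other than its author has approved it. The Python sketch below illustrates that idea under simple assumptions: readings are held in an indexed sequence and each correction is a constant offset over a range. The `Dataset` and `Adjustment` classes are hypothetical and are not our system's actual data model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Adjustment:
    """One correction to a range of raw readings (hypothetical model)."""
    start: int          # index of the first affected reading
    end: int            # index one past the last affected reading
    offset: float       # value added to each affected reading
    author: str
    note: str
    approved_by: Optional[str] = None

    def approve(self, reviewer: str) -> None:
        # The check must be made by a second person, not the author.
        if reviewer == self.author:
            raise ValueError("an adjustment cannot be approved by its author")
        self.approved_by = reviewer


@dataclass
class Dataset:
    sensor_id: str
    raw: tuple          # raw readings, never modified
    adjustments: list = field(default_factory=list)

    def corrected(self) -> list:
        """Raw readings with only the approved adjustments applied."""
        values = list(self.raw)
        for adj in self.adjustments:
            if adj.approved_by is None:
                continue  # pending changes never reach downstream calculations
            for i in range(adj.start, adj.end):
                values[i] += adj.offset
        return values

    def history(self, index: int) -> list:
        """Every adjustment, pending or approved, that touches one reading."""
        return [a for a in self.adjustments if a.start <= index < a.end]
```

Because `corrected()` is always derived from `raw` plus the recorded adjustments, any output value can be traced back through `history()` to the original reading, and unapproved changes stay visible without affecting results.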

We can also provide tools for use at remote sites, so that data can be catalogued as soon as it is gathered and then added correctly to the system days, weeks or even months later.

An example

Sarah collects data from Tide Gauge 3638. Later that week, when she is moored somewhere with wifi, she uploads the data to the system. Ben, in the main office, sees that the system has flagged a day on which the tidal heights suddenly jump by 3 cm. Ben gives Sarah a call; she arranges a detour back to the tide gauge and finds that it has indeed been knocked 3 cm out of position. Sarah repositions the gauge. Meanwhile, Ben applies a 3 cm adjustment to the affected section of the data and marks it as pending Amal’s approval.

The dataset appears on Amal’s work list. She reads Ben’s note about the correction and plots the corrected data alongside the raw data and the predicted tide heights for the same period, to satisfy herself that the correction is reasonable. She marks the correction as “approved” and attaches Sarah’s report on the tide gauge fault. The corrected data will now be taken into account the next time tidal harmonics are generated.
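
A flag like the one Ben saw could be raised by subtracting the predicted tide from the observed heights, so that the tidal signal itself drops out, and then looking for a sudden step in what remains. The Python sketch below uses one simple approach, comparing the mean residual of each day with the previous day's, and is an illustration rather than the detection method our systems use. The function name, the fixed number of readings per day, and the threshold are assumptions; heights are taken to be in millimetres.

```python
from statistics import mean


def flag_level_shifts(observed, predicted, readings_per_day, threshold):
    """Return indices of days whose mean residual jumps by more than threshold.

    Residual = observed - predicted, so the tide itself is removed and a
    sudden offset (such as a knocked gauge) shows up as a step between days.
    """
    if len(observed) != len(predicted):
        raise ValueError("observed and predicted must be the same length")
    residuals = [o - p for o, p in zip(observed, predicted)]
    # Average the residuals over each day's block of readings.
    daily_means = [
        mean(residuals[i:i + readings_per_day])
        for i in range(0, len(residuals), readings_per_day)
    ]
    flagged = []
    for day in range(1, len(daily_means)):
        # Flag a day whose mean level differs sharply from the day before.
        if abs(daily_means[day] - daily_means[day - 1]) > threshold:
            flagged.append(day)
    return flagged
```

With a threshold of 20 mm, a gauge that shifts by 30 mm partway through a record would have the first affected day flagged, while ordinary noise of a few millimetres would not. Real data with weather-driven surges would need a more robust test, for example comparing medians over longer windows.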